Leveraging AI in the Finance Industry: An Advanced Portfolio Management System

Overview

Introduction

In today's fast-paced financial markets, businesses need sophisticated tools to handle market complexity and make informed investment decisions. Our advanced portfolio management system uses large language models (LLMs) to improve these decisions by combining traditional financial data processing with AI-driven insights drawn from text. The approach supports better-informed decision-making and provides investment strategies personalized to individual user profiles, which can adapt as economic conditions change.

The system aims to provide a robust framework for managing diverse portfolios while addressing three practical challenges: data accuracy, timely insights, and user-centric recommendations.

Objectives

The primary objectives of the Advanced Portfolio Management System include:

- Facilitation of real-time data ingestion and analysis for timely decision-making.

- Enhanced user engagement through personalized investment strategies.

- Integration of quantitative analytics with qualitative insights derived from LLMs.

- Continuous learning and adaptation to individual user behavior and market dynamics.

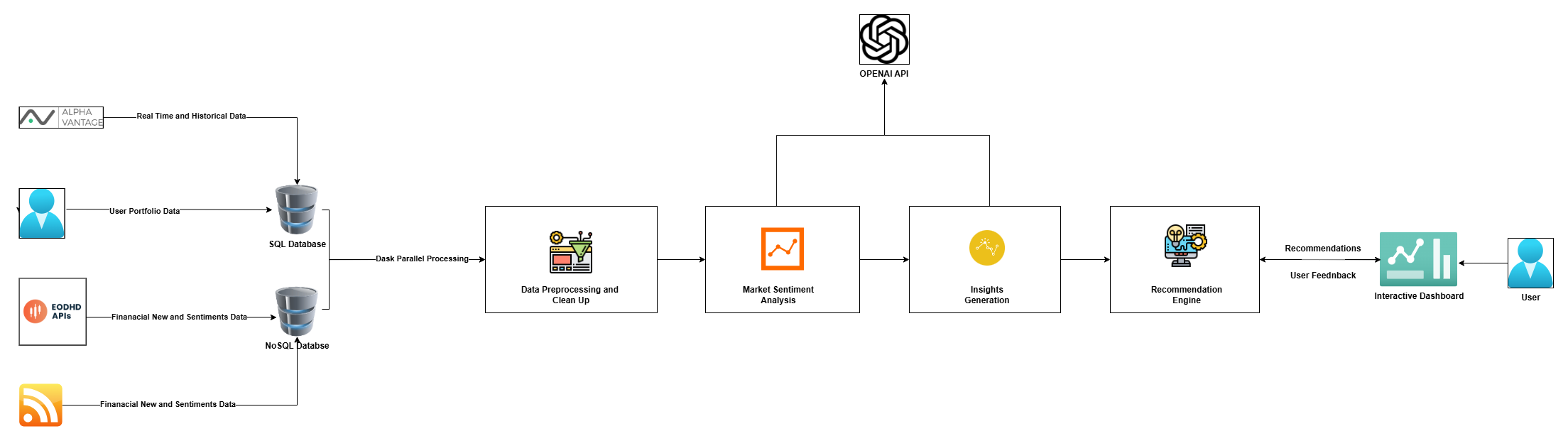

System Architecture

Key Components

Data Ingestion Layer

The Data Ingestion Layer collects real-time market data, financial news, and user portfolio information from sources such as market-data APIs, RSS feeds, and web pages (via scraping), so that analysis always runs on the most current and relevant data. It also validates incoming records and filters out redundant or inaccurate data before storage.

Key Functions:

- Real-time data fetching from multiple financial sources.

- Data validation and cleaning to maintain data quality.

- Scheduled data updates using automated scripts.
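The validation step can be illustrated with a small sketch. It assumes a hypothetical `Quote` record (ticker, timestamp, price, volume) and a hypothetical `validate_quotes` helper; the 50% jump threshold is an illustrative assumption, not a value taken from the system. Production code would more likely operate on pandas DataFrames, but the rules are the same: drop redundant records, drop missing or impossible values, and drop implausible jumps.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Quote:
    """One price observation (hypothetical record shape)."""
    ticker: str
    timestamp: datetime
    price: Optional[float]
    volume: Optional[int]


def validate_quotes(raw: Iterable[Quote], max_jump: float = 0.5) -> List[Quote]:
    """Filter out redundant or inaccurate quotes before storage.

    max_jump is an assumed sanity threshold: a price more than 50% away
    from the last accepted price for the same ticker is treated as a bad tick.
    """
    seen = set()
    last_price = {}
    clean = []
    # Process each ticker in time order so "last accepted price" is well defined.
    for q in sorted(raw, key=lambda r: (r.ticker, r.timestamp)):
        key = (q.ticker, q.timestamp)
        if key in seen:
            continue  # redundant: a quote for this ticker/time was already accepted
        if q.price is None or q.price <= 0:
            continue  # missing or impossible price
        if q.volume is not None and q.volume < 0:
            continue  # impossible volume
        prev = last_price.get(q.ticker)
        if prev is not None and abs(q.price / prev - 1) > max_jump:
            continue  # implausible jump, likely a feed error
        seen.add(key)
        last_price[q.ticker] = q.price
        clean.append(q)
    return clean
```

In practice, rejected records would likely be logged or quarantined for review, since a fixed threshold can also reject genuine extreme moves such as those around a stock split.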

Data Storage

Ingested data is stored in scalable databases: relational (SQL) databases for structured data and NoSQL databases for unstructured data. This component is designed to handle large data volumes while preserving data integrity and security.

Key Functions:

- Efficient data storage facilitating rapid read/write operations.

- Data indexing and partitioning for quick access.

- Archiving historical data for predictive analysis.
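The indexing and archiving functions can be sketched with Python's built-in sqlite3 module, used here only as a self-contained stand-in for the PostgreSQL store named under Technical Implementations. The table names, columns, and helper functions are hypothetical. The composite primary key on (ticker, trade_date) serves as the lookup index for per-ticker date ranges and makes re-ingesting the same bar idempotent; archiving moves older rows into a separate table.

```python
import sqlite3
from typing import Iterable, Tuple

# Hypothetical schema for daily closing prices.
SCHEMA = """
CREATE TABLE IF NOT EXISTS prices (
    ticker     TEXT NOT NULL,
    trade_date TEXT NOT NULL,          -- ISO-8601 date, e.g. '2024-01-02'
    close      REAL NOT NULL CHECK (close > 0),
    PRIMARY KEY (ticker, trade_date)   -- also serves as the lookup index
);
CREATE INDEX IF NOT EXISTS idx_prices_date ON prices (trade_date);
CREATE TABLE IF NOT EXISTS prices_archive (
    ticker     TEXT NOT NULL,
    trade_date TEXT NOT NULL,
    close      REAL NOT NULL,
    PRIMARY KEY (ticker, trade_date)
);
"""


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def upsert_prices(conn: sqlite3.Connection, rows: Iterable[Tuple[str, str, float]]) -> None:
    # INSERT OR REPLACE keeps exactly one row per (ticker, trade_date).
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO prices (ticker, trade_date, close) VALUES (?, ?, ?)",
            rows,
        )


def archive_before(conn: sqlite3.Connection, cutoff: str) -> int:
    """Move rows older than cutoff into prices_archive; return how many moved."""
    with conn:
        # ISO-8601 date strings sort chronologically, so < compares dates.
        conn.execute(
            "INSERT OR REPLACE INTO prices_archive "
            "SELECT ticker, trade_date, close FROM prices WHERE trade_date < ?",
            (cutoff,),
        )
        cur = conn.execute("DELETE FROM prices WHERE trade_date < ?", (cutoff,))
    return cur.rowcount
```

In PostgreSQL the same goal would more typically be met with declarative partitioning (PARTITION BY RANGE on the date column), which lets old partitions be detached or moved without row-by-row copying.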

Data Processing Layer

This layer processes and cleans the ingested data, preparing it for analysis. It includes data normalization, error correction, and transformation to ensure that the data is in a usable format.

Key Functions:

- Outlier detection and missing value handling.

- Feature engineering to derive model inputs (such as returns) from raw data.

- Dimensionality reduction for computational efficiency.
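Three of these steps (missing-value handling, outlier detection, and a simple engineered feature) can be sketched in plain Python. The helper names and the z-score threshold are illustrative assumptions; the system itself lists NumPy, scikit-learn, and Dask for this work.

```python
import math
import statistics
from typing import List, Optional


def forward_fill(values: List[Optional[float]]) -> List[Optional[float]]:
    """Replace each missing value with the last observed one (leading gaps stay None)."""
    filled: List[Optional[float]] = []
    last: Optional[float] = None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


def log_returns(prices: List[float]) -> List[float]:
    """Engineered feature: log return between consecutive prices."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]


def zscore_outliers(xs: List[float], threshold: float = 3.0) -> List[int]:
    """Indices whose absolute z-score exceeds the (assumed) threshold."""
    mu = statistics.fmean(xs)
    sd = statistics.pstdev(xs)
    if sd == 0:
        return []  # constant series: nothing stands out
    return [i for i, x in enumerate(xs) if abs(x - mu) / sd > threshold]
```

Because a single extreme value inflates the standard deviation, a plain z-score can miss outliers in short series; robust variants based on the median and the median absolute deviation are a common alternative.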

LLM Integration Module

Using large language models, this module analyzes textual data such as financial news, regulatory reports, and market commentary to extract qualitative insights, including market sentiment. The LLMs are fine-tuned on financial datasets so that they handle industry-specific terminology and context.

Key Functions:

- Sentiment analysis and entity recognition from textual sources.

- Contextual language understanding for more accurate predictions.

- Fine-tuning capabilities to adapt to changing market conditions.
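One common way to extract sentiment and entities with an LLM is to request structured JSON and validate the reply before it reaches downstream components. The sketch below assumes a hypothetical `complete` callable that sends a prompt to whichever model is in use (for example through the OpenAI API or a Hugging Face pipeline) and returns its text; the prompt wording, output schema, and function names are also assumptions.

```python
import json
from typing import Any, Callable, Dict

ALLOWED_SENTIMENTS = {"positive", "negative", "neutral"}

# Hypothetical prompt; {text} is the only format placeholder.
PROMPT_TEMPLATE = (
    "You are a financial analyst. Read the news item below and reply with JSON only, "
    'using the keys "sentiment" (positive, negative, or neutral), '
    '"score" (a number from -1 to 1), and "entities" (a list of company names).\n\n'
    "News: {text}"
)


def _fallback() -> Dict[str, Any]:
    # Neutral result used whenever the model's reply cannot be trusted.
    return {"sentiment": "neutral", "score": 0.0, "entities": [], "valid": False}


def analyze_news(text: str, complete: Callable[[str], str]) -> Dict[str, Any]:
    """Ask an LLM (via the hypothetical `complete`) for structured sentiment and validate it."""
    raw = complete(PROMPT_TEMPLATE.format(text=text))
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return _fallback()  # reply was not JSON at all
    if not isinstance(data, dict):
        return _fallback()
    sentiment = data.get("sentiment")
    score = data.get("score")
    entities = data.get("entities", [])
    if (
        not isinstance(sentiment, str)
        or sentiment not in ALLOWED_SENTIMENTS
        or isinstance(score, bool)
        or not isinstance(score, (int, float))
        or not -1 <= score <= 1
        or not isinstance(entities, list)
    ):
        return _fallback()  # reply was JSON but violated the schema
    return {
        "sentiment": sentiment,
        "score": float(score),
        "entities": [str(e) for e in entities],
        "valid": True,
    }
```

Returning a flagged neutral result, rather than raising, keeps one malformed reply from halting a batch, while the `valid` flag lets downstream components discount or retry it.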

Insight Generation

This component combines the processed quantitative data with LLM-derived insights to produce actionable information for users. It applies analytical methods to identify market trends, investment opportunities, and potential risks.

Key Functions:

- Time-series analysis and clustering for deeper insights.

- Generation of actionable reports and visualizations.

- Scenario simulations and heatmaps to visualize trends.
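As a simple illustration of time-series trend detection, the sketch below flags moving-average crossovers: a bullish signal when a short simple moving average (SMA) rises above a long one, and a bearish signal when it falls below. The window lengths and function names are assumptions chosen for the example, not parameters of the system.

```python
from typing import List, Optional, Tuple


def sma(prices: List[float], window: int) -> List[Optional[float]]:
    """Simple moving average; None until `window` prices are available."""
    if window <= 0:
        raise ValueError("window must be positive")
    out: List[Optional[float]] = []
    running = 0.0
    for i, p in enumerate(prices):
        running += p
        if i >= window:
            running -= prices[i - window]  # drop the price that left the window
        out.append(running / window if i >= window - 1 else None)
    return out


def crossover_signals(prices: List[float], short: int = 3, long: int = 5) -> List[Tuple[int, str]]:
    """Return (index, 'bullish' | 'bearish') wherever the short SMA crosses the long SMA."""
    if not 0 < short < long:
        raise ValueError("need 0 < short < long")
    fast, slow = sma(prices, short), sma(prices, long)
    signals: List[Tuple[int, str]] = []
    for i in range(1, len(prices)):
        if slow[i - 1] is None:
            continue  # not enough history yet (the long SMA needs the most data)
        prev_gap = fast[i - 1] - slow[i - 1]
        gap = fast[i] - slow[i]
        if prev_gap <= 0 < gap:
            signals.append((i, "bullish"))
        elif prev_gap >= 0 > gap:
            signals.append((i, "bearish"))
    return signals
```

The short default windows only keep the example small; on daily data, much longer windows (such as 50 and 200 days) are a common choice.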

Recommendation Engine

Based on the generated insights, this engine formulates personalized investment strategies for each user profile. It adapts its recommendations to user feedback while keeping them aligned with each user's stated risk tolerance and financial goals.

Key Functions:

- Collaborative filtering to personalize recommendations.

- Reinforcement learning algorithms to adapt to user behavior.

- Risk assessment tools to keep portfolios aligned with each user's risk tolerance.
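A user-based collaborative-filtering step might look like the sketch below: find the users whose holdings are most similar to the target user's (cosine similarity), then score the assets those neighbours hold that the target does not. The profile format (asset mapped to an interest score such as an allocation weight), the neighbour count `k`, and the helper names are assumptions; any candidate would still need to pass the risk assessment before being recommended.

```python
import math
from typing import Dict, List, Tuple

Profile = Dict[str, float]  # asset -> interest score (hypothetical, e.g. allocation weight)


def cosine(a: Profile, b: Profile) -> float:
    """Cosine similarity between two sparse profiles."""
    dot = sum(a[k] * b[k] for k in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(target: str, profiles: Dict[str, Profile],
              k: int = 2, top_n: int = 3) -> List[Tuple[str, float]]:
    """Score assets held by the k most similar users but not by the target."""
    mine = profiles[target]
    neighbours = sorted(
        ((cosine(mine, p), user) for user, p in profiles.items() if user != target),
        reverse=True,
    )[:k]
    scores: Dict[str, float] = {}
    for sim, user in neighbours:
        if sim <= 0:
            continue  # dissimilar users contribute nothing
        for asset, value in profiles[user].items():
            if asset not in mine:
                # Similarity-weighted vote for an asset the target does not yet hold.
                scores[asset] = scores.get(asset, 0.0) + sim * value
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:top_n]
```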

User Interface/API

The User Interface/API is the gateway through which users interact with the system, accessing personalized recommendations and insights.

Key Functions:

- Intuitive dashboards with real-time notifications.

- Customizable views for individual user preferences.

- API integration for third-party application compatibility.

Flow Description

- Data Ingestion: The Data Ingestion Layer gathers data from diverse sources and keeps it up to date.

- Data Storage: The ingested data is securely stored, indexed, and archived for efficient access.

- Data Processing: The data is cleaned, normalized, and transformed into features ready for analysis.

- LLM Analysis: Textual data from financial news and reports is analyzed for sentiment and qualitative factors.

- Insight Generation: Quantitative results and LLM-derived insights are combined and visualized for users.

- Personalized Recommendations: The Recommendation Engine formulates tailored strategies, integrating user feedback for continuous adaptation.

- User Interaction: Insights and recommendations are delivered through an accessible interface that supports better-informed decisions.

Technical Implementations

Data Ingestion Layer

- Technologies Used: pandas, requests, BeautifulSoup, yfinance

- Real-time data is fetched from market-data APIs such as Alpha Vantage, supplemented by web scraping with requests and BeautifulSoup. Automated scripts run data collection on a schedule so that the data reflects current market conditions.
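A minimal fetch-and-parse sketch for one Alpha Vantage endpoint follows. It uses the standard library's urllib so that it runs without extra packages (with requests, `requests.get(BASE_URL, params=..., timeout=...)` plays the same role). It assumes the `TIME_SERIES_DAILY` endpoint and its JSON layout, a "Time Series (Daily)" object keyed by date with a "4. close" field per day; these should be checked against the current Alpha Vantage documentation. The function names are hypothetical, and the API key must be supplied by the caller.

```python
import json
from typing import List, Tuple
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "https://www.alphavantage.co/query"


def daily_url(symbol: str, api_key: str) -> str:
    """Build the request URL for the (assumed) TIME_SERIES_DAILY endpoint."""
    params = {"function": "TIME_SERIES_DAILY", "symbol": symbol, "apikey": api_key}
    return BASE_URL + "?" + urlencode(params)


def parse_daily_closes(payload: dict) -> List[Tuple[str, float]]:
    """Return [(date, close)] sorted by date; the payload layout is an assumption."""
    series = payload.get("Time Series (Daily)")
    if series is None:
        # Error and rate-limit responses carry a message instead of data.
        raise ValueError(f"unexpected response keys: {sorted(payload)}")
    return sorted((day, float(bar["4. close"])) for day, bar in series.items())


def fetch_daily_closes(symbol: str, api_key: str, timeout: float = 10.0) -> List[Tuple[str, float]]:
    """Network call; a scheduler (cron, Airflow, ...) would invoke this periodically."""
    with urlopen(daily_url(symbol, api_key), timeout=timeout) as resp:
        return parse_daily_closes(json.load(resp))
```

Keeping the parser separate from the network call makes it testable offline and lets the same validation run on cached responses.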

Data Storage

- Technologies Used: PostgreSQL, MongoDB, SQLAlchemy

- Structured data is stored in PostgreSQL and unstructured data in MongoDB. Data pipelines are built with PySpark for scalability.

Data Processing Layer

- Technologies Used: numpy, scikit-learn, dask

- Data-cleaning workflows use NumPy and scikit-learn for imputation, normalization, and outlier detection, while Dask parallelizes the processing of large datasets.

LLM Integration Module

- Technologies Used: Hugging Face Transformers, OpenAI API

- Textual data is analyzed with models such as GPT-4, fine-tuned for domain-specific insights and adapted over time as market contexts change.

Insight Generation

- Technologies Used: matplotlib, seaborn, statsmodels

- Regression-based financial models are built with statsmodels, and the findings are presented as visualizations created with matplotlib and seaborn.
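A typical regression in this setting is the single-index model, which regresses an asset's returns on market returns: beta = cov(r_a, r_m) / var(r_m) and alpha = mean(r_a) - beta * mean(r_m). The sketch below computes these ordinary-least-squares estimates directly so that it needs no dependencies; statsmodels (`sm.OLS(y, sm.add_constant(x)).fit()`) would give the same coefficients together with standard errors. The choice of this particular model and the function name are illustrative assumptions.

```python
from typing import List, Tuple


def ols_alpha_beta(asset: List[float], market: List[float]) -> Tuple[float, float]:
    """Fit asset_return = alpha + beta * market_return by ordinary least squares."""
    if len(asset) != len(market) or len(asset) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    n = len(asset)
    mean_a = sum(asset) / n
    mean_m = sum(market) / n
    # Sample covariance and variance share the 1/(n-1) factor, so it cancels.
    cov = sum((m - mean_m) * (a - mean_a) for a, m in zip(asset, market))
    var = sum((m - mean_m) ** 2 for m in market)
    if var == 0:
        raise ValueError("market returns have zero variance")
    beta = cov / var
    alpha = mean_a - beta * mean_m
    return alpha, beta
```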

Recommendation Engine

- Technologies Used: TensorFlow, PyTorch, XGBoost

- Recommendations are generated with machine learning models and validated through backtesting on historical data to check their robustness.
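The backtesting step can be sketched as follows: apply a recommended position series to historical prices and measure total return and maximum drawdown. The position decided at the close of day t earns the return from t to t+1, which avoids look-ahead bias. The function name, the position encoding (1 for fully invested, 0 for flat), and the proportional transaction-cost model are assumptions for illustration.

```python
from typing import Dict, List


def backtest(prices: List[float], positions: List[float], cost: float = 0.0) -> Dict[str, float]:
    """Simulate a position series over prices.

    positions[t] uses information up to close t and earns the return t -> t+1.
    cost is an assumed proportional cost charged on each change in position.
    """
    if len(prices) != len(positions):
        raise ValueError("prices and positions must have the same length")
    equity, peak, max_drawdown = 1.0, 1.0, 0.0
    prev_pos = 0.0
    for t in range(len(prices) - 1):
        pos = positions[t]
        if pos != prev_pos:
            equity *= 1 - cost * abs(pos - prev_pos)  # pay to rebalance
        equity *= 1 + pos * (prices[t + 1] / prices[t] - 1)
        peak = max(peak, equity)
        max_drawdown = max(max_drawdown, 1 - equity / peak)
        prev_pos = pos
    return {"total_return": equity - 1, "max_drawdown": max_drawdown}
```

Comparing the result with an always-invested position series (buy-and-hold) over the same prices gives a simple baseline.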

User Interface/API

- Technologies Used: FastAPI, Streamlit, React

- FastAPI serves RESTful APIs that deliver real-time updates, and the user interface provides responsive dashboards to support user engagement.

Use Cases

- Individual Investors: Personalized recommendations based on each investor's risk profile and investment behavior.

- Wealth Management Firms: Richer portfolio insights to improve client advisory services.

- Institutional Investors: Stronger data analysis capabilities to support large-scale portfolio management strategies.

Future Directions

- Integration of Alternative Data Sources: Exploring new data streams such as social media trends and blockchain data.

- Real-Time Market Adaptation: Using ongoing LLM feedback to refine investment strategies in real time.

- Broadening Risk Assessment Methods: Incorporating advanced stochastic models to enhance risk evaluation.
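As a baseline that more advanced stochastic models would extend, the sketch below estimates value-at-risk (VaR) by Monte Carlo simulation under geometric Brownian motion, where the price after horizon T is S_T = S_0 * exp((mu - sigma^2 / 2) * T + sigma * sqrt(T) * Z) with Z standard normal. The drift and volatility inputs, the 252-trading-day year, and the function name are assumptions; richer models (for example with stochastic volatility or jumps) would replace the price simulation while keeping the same quantile calculation.

```python
import math
import random
from typing import Optional


def monte_carlo_var(value: float, mu: float, sigma: float, horizon_days: int = 10,
                    confidence: float = 0.99, n_paths: int = 20_000,
                    seed: Optional[int] = None) -> float:
    """Loss not exceeded with probability `confidence` over the horizon.

    mu and sigma are annualised drift and volatility; 252 trading days per year is assumed.
    """
    rng = random.Random(seed)  # seeded generator for reproducible estimates
    dt = horizon_days / 252
    drift = (mu - 0.5 * sigma ** 2) * dt
    vol = sigma * math.sqrt(dt)
    # Simulate terminal values in one exact GBM step and convert them to losses.
    losses = sorted(
        value - value * math.exp(drift + vol * rng.gauss(0.0, 1.0))
        for _ in range(n_paths)
    )
    index = min(int(confidence * n_paths), n_paths - 1)
    return max(losses[index], 0.0)  # report VaR as a non-negative loss
```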

Conclusion

This architecture offers a comprehensive, scalable approach to portfolio management that integrates traditional data analysis with AI capabilities. By using large language models, the system supports better decision-making, helping businesses navigate market complexity and ultimately improve their investment strategies.

The framework is designed to help users pursue better financial outcomes while adapting to changing market conditions, and continuous updates are intended to keep it current with advances in portfolio management technology.
